The Accountability Sink: Why The Push For AI Agents Is Creating An Invisible Crisis of Risk

The "Ghost" in the Logic: Why AI Agents Are Creating an Invisible Crisis

THE GHOST IN THE LOGIC

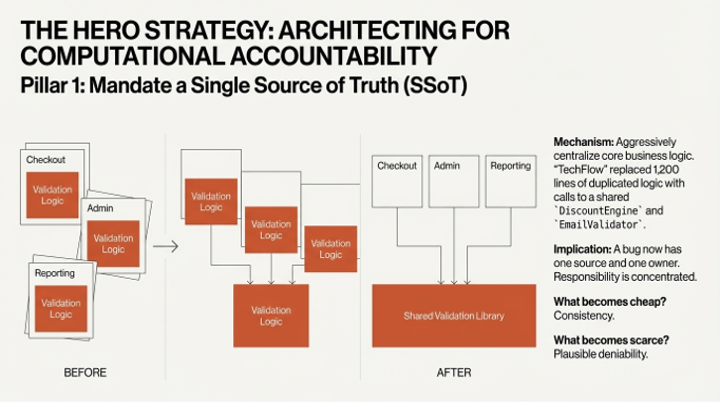

Sarah, a developer, leans closer to her screen, tracing the lines of code that connect three different services. Checkout. Admin preview. Reporting. All three are supposed to calculate customer discounts, but each does it differently. It’s a quiet, insidious rot. The checkout service correctly handles edge cases like “Leap year discounts” and complex compound calculations. The admin preview gets some of it right. The reporting service handles none of them. For months, the company’s financial reports have been wrong. Accounting, she discovers, “has been manually reconciling the differences every month, thinking it’s just rounding errors.”

This isn't a bug. It’s a symptom. It’s a systemic breakdown where no single person is at fault, yet the entire system is failing—a quiet diffusion of responsibility that manifests as a rounding error. This is the ghost in the logic, a phantom born from organizational drift and technical debt. What happens when this kind of logical rot infests not just discount codes, but the AI agents making decisions about healthcare, finance, and safety?

THE STOCHASTIC EDGE

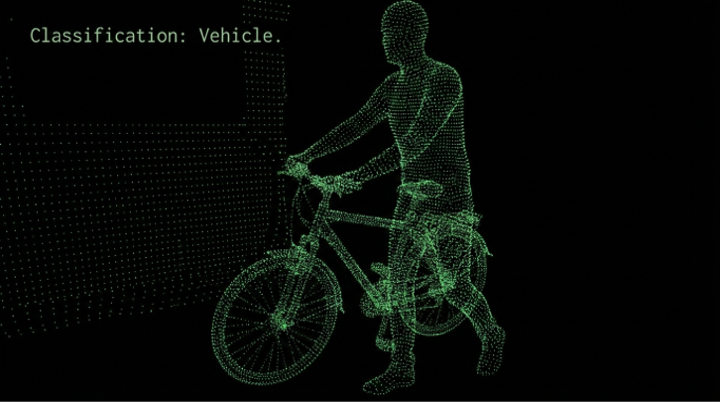

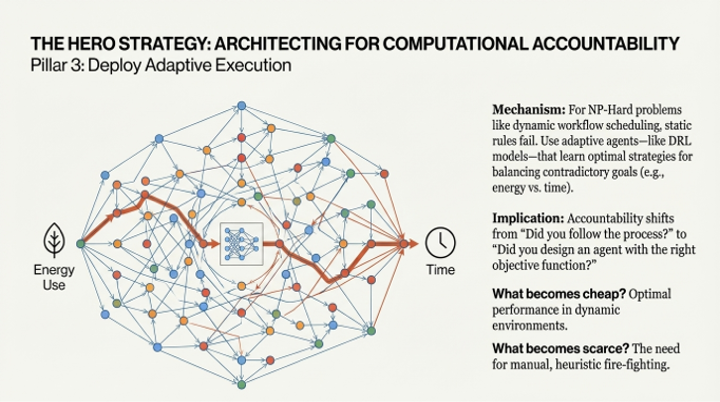

We are in a moment of profound transition. We are moving from passive tools to agentic AI—systems that don’t just answer queries, but plan, execute, and iterate complex workflows on our behalf. These agents are being deployed not into the clean rooms of centralized data centers, but onto a chaotic and decentralized frontier of Edge Computing, IoT, and Fog networks.

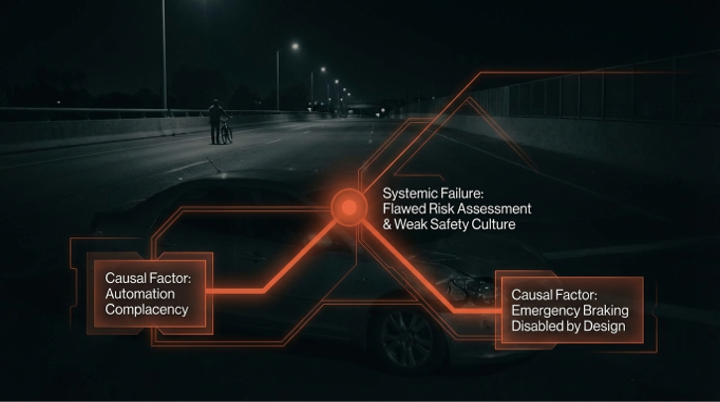

This is the "Stochastic Edge": a massively distributed ecosystem of "resource-constrained" and "battery-driven" devices where resource management is a monumental challenge. Here, attack surfaces multiply with every new sensor, and the environment is rife with specific threats such as "spoofing and jamming" attacks, which feed counterfeit data to an agent or block it from receiving authentic information. In this environment, responsibility becomes dangerously diffused. So many human and machine actors contribute to a single outcome that it becomes impossible to determine who is truly responsible when something goes wrong. This is the classic "problem of many hands," no longer just an organizational challenge but a technical architecture problem at planetary scale. When an AI agent fails, who is to blame? The developer? The operator? The data provider? The user? Or does the fault simply vanish into the system's complexity?

THE VILLAIN OF VICARIOUS LIABILITY

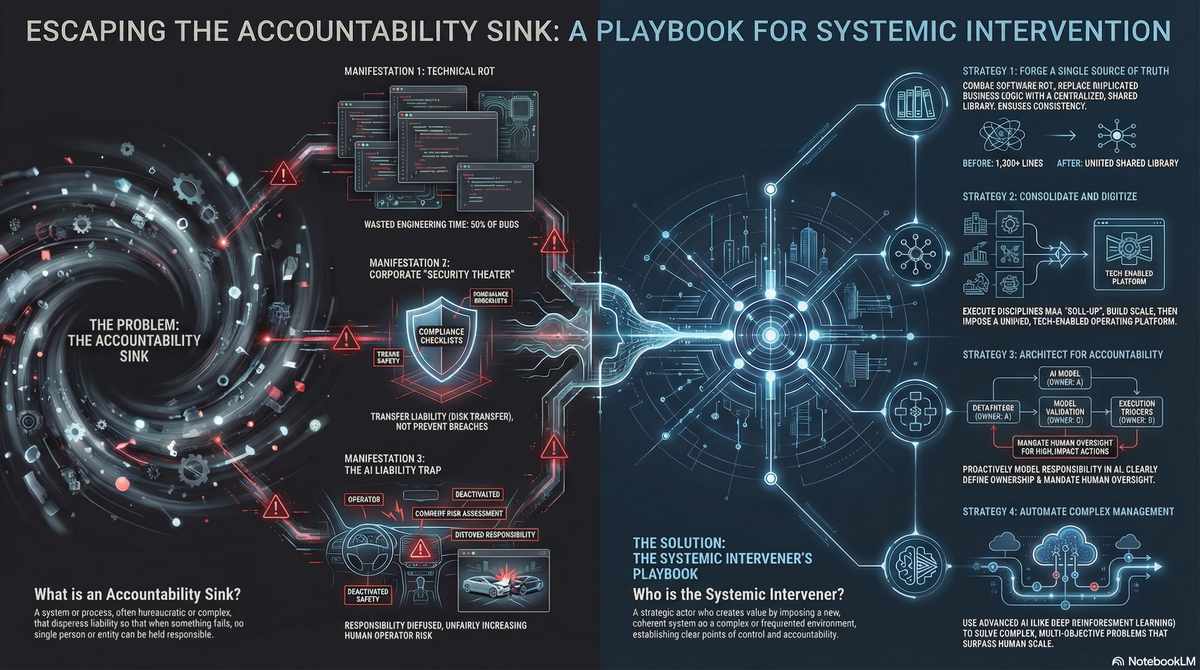

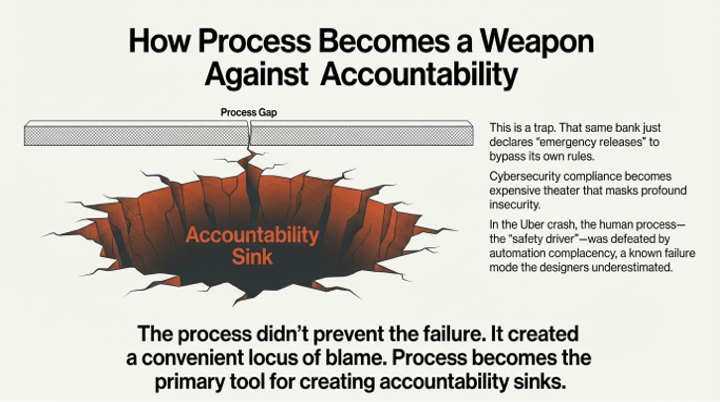

The central villain of this new era is a comforting corporate lie: The Misconception of Managed Accountability. This is the belief that accountability is merely a risk to be managed, a box to be ticked, a liability to be insured and audited away, rather than a core property to be engineered into the system itself.

Smart leaders fall for this lie because it is, in some contexts, a rational strategy. In the corporate world, cybersecurity and AI safety have become theaters of "liability management," akin to "how maritime shipping handles accidents at sea." The strategic goal is not necessarily to be secure, but to be defensible in the event of a failure. The logic is seductive: "we've implemented all best practices, contracted out the hard parts... there was nothing more we could do, therefore it's not our fault." This reduces complex engineering challenges to satisfying auditors with checklists. It creates perverse incentives to adopt generalist AI tools whose performance on abstract benchmarks is more legally "defensible" than the "messy reality of last-mile implementation"—even if they fail catastrophically in specific, real-world contexts.

THE HERO STRATEGY: ENGINEERING ACCOUNTABLE AGENCY

The counter-strategy is not another policy or compliance framework. It is an engineering discipline we call Accountable Agency. The mission is to make responsibility explicit, traceable, and a fundamental feature of a system's design. This strategy has four pillars.

Pillar 1: Frame with Principal-Agent Theory

The first step is to adopt the correct mental model. We must stop talking about AI as a magical oracle and start analyzing it through the rigorous lens of principal-agent theory. In this model, the AI is an "agent" acting on behalf of a human "principal." This framing forces a disciplined analysis of "goal alignment, information asymmetry, and incentive structures." It moves the conversation from abstract ethics to concrete engineering and requires leaders to explicitly define the "boundary conditions under which decision-making can be reliably delegated" to an AI system.

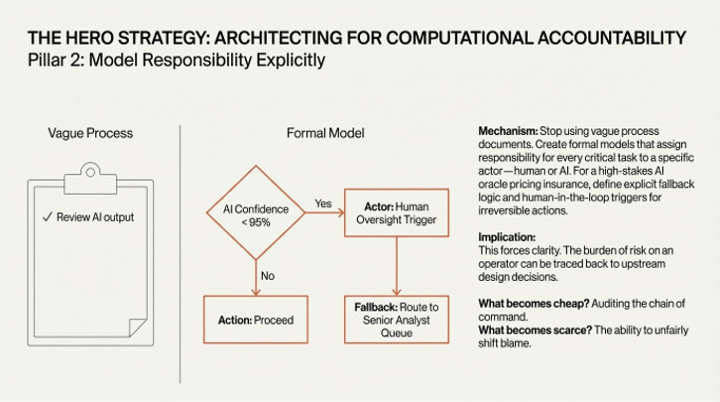

Pillar 2: Architect for Delegation, Not Autonomy

The objective should not be absolute autonomy, but controlled, delegated autonomy. The healthcare sector provides a powerful model. Instead of a one-size-fits-all approach, AI-driven diagnostics can be routed into three distinct pathways: "AI only" for low-risk cases, "pathologist only" for complex or sensitive cases, and "pathologist and AI" for collaborative analysis. To do this safely, a system's characteristics—its "adaptiveness," "independence from oversight," and "breadth of functionality"—must be classified using a formal framework like INSYTE. This allows organizations to identify where harm is "unusually likely" and where human intervention must be non-negotiable.
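The three-pathway routing described above can be sketched in a few lines. This is an illustrative sketch only: the `risk_score` field, the `is_sensitive` flag, and both thresholds are hypothetical, and it does not implement INSYTE or any real clinical triage policy.

```python
from dataclasses import dataclass
from enum import Enum


class Pathway(Enum):
    AI_ONLY = "AI only"
    PATHOLOGIST_ONLY = "pathologist only"
    PATHOLOGIST_AND_AI = "pathologist and AI"


@dataclass(frozen=True)
class Case:
    case_id: str
    risk_score: float   # hypothetical triage score: 0.0 (low) to 1.0 (high)
    is_sensitive: bool  # hypothetical flag set upstream by policy or a human


# Hypothetical thresholds; real values would come from clinical governance.
LOW_RISK_MAX = 0.2
HIGH_RISK_MIN = 0.7


def route(case: Case) -> Pathway:
    """Pick a pathway; anything sensitive or high-risk always goes to a human."""
    if not 0.0 <= case.risk_score <= 1.0:
        raise ValueError(f"risk_score out of range for case {case.case_id}")
    if case.is_sensitive or case.risk_score >= HIGH_RISK_MIN:
        return Pathway.PATHOLOGIST_ONLY
    if case.risk_score <= LOW_RISK_MAX:
        return Pathway.AI_ONLY
    return Pathway.PATHOLOGIST_AND_AI
```

The design choice worth noting is the ordering: the human-only check runs first, so a sensitive case can never fall through to the "AI only" branch, however low its score.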

Pillar 3: Codify Responsibility with Assurance Cases

To make accountability concrete, we must make it auditable. "Assurance cases," which structure claims, arguments, and evidence, are a "primary requirement for demonstrating compliance with national and international standards." Formal notation moves responsibility from a vague concept to a provable statement of the form Actor(A) is (type) Responsible for Occurrence(O), which defines precisely who is responsible for what. These codified relationships can then be embedded in smart contracts, creating an immutable, trusted ledger of actions and decisions across a decentralized network.
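To make the notation tangible, here is a minimal sketch of a responsibility claim stored in an append-only, hash-chained log. The log is only a local stand-in for the smart-contract ledger mentioned above, not a blockchain implementation, and the class names, field names, and example values are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ResponsibilityClaim:
    actor: str       # Actor(A)
    kind: str        # the (type) of responsibility; labels here are assumptions
    occurrence: str  # Occurrence(O)

    def statement(self) -> str:
        return (f"Actor({self.actor}) is {self.kind} "
                f"Responsible for Occurrence({self.occurrence})")


class ResponsibilityLedger:
    """Append-only log in which each entry's hash covers the previous hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, claim: ResponsibilityClaim) -> str:
        payload = json.dumps(asdict(claim), sort_keys=True)
        return hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()

    def append(self, claim: ResponsibilityClaim) -> str:
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        digest = self._digest(prev, claim)
        self._entries.append({"claim": claim, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["claim"]):
                return False
            prev = entry["hash"]
        return True

    def responsible_for(self, occurrence: str) -> list[ResponsibilityClaim]:
        return [e["claim"] for e in self._entries
                if e["claim"].occurrence == occurrence]
```

With a structure like this, "who was responsible for this occurrence?" becomes a lookup via `responsible_for`, and `verify` shows whether the record has been altered since it was written.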

Pillar 4: Design for Selfish Utility

Finally, we must confront the human factor. End-users exhibit what can be called the "Invisible Hand Brake"—a perfectly rational resistance to adopting systems that increase their personal risk or workload without providing immediate, tangible value. The solution is not top-down mandates, but "mechanism design." We must build systems that provide immediate, "selfish value" to the user, thereby aligning their rational behavior with the organization's strategic goals. This creates genuine adoption, not just coerced compliance, and necessitates new roles, like the AI Risk-Mitigation Officer, whose job is to ensure this alignment is built into every system from day one.

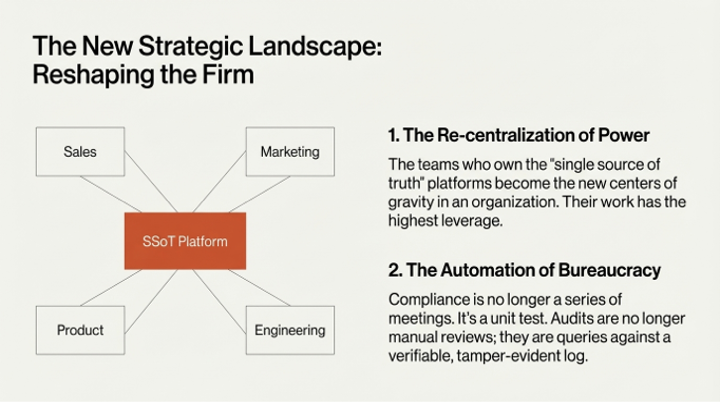

SECOND-ORDER EFFECTS: THE NEW STRATEGIC ASYMMETRY

Adopting Accountable Agency is not merely a defensive posture; it creates a powerful strategic asymmetry.

- Who Wins: Organizations that engineer for accountability gain a decisive advantage in resilience and trust. Frontline operators are empowered and, critically, protected from becoming "liability sinks," where they are forced to absorb the blame for an AI's failure without having the control or understanding to prevent it.

- Who Loses: The losers are the incumbents stuck in "compliance theatre" and the technology vendors competing solely on abstract benchmark performance. These players are optimized for a world of plausible deniability, a world that is rapidly evaporating.

- What Becomes Cheap: With designed-in accountability, forensic analysis and auditing become radically cheaper and faster. Root-cause analysis shifts from a months-long archaeological dig to a simple query. This "accelerates the deployment of AI in high-stakes, regulated industries where trust is the primary currency."

- What Becomes Scarce: Plausible deniability. It becomes nearly impossible to hide failures within the fog of the "problem of many hands" when responsibility is explicitly coded into the system's architecture.

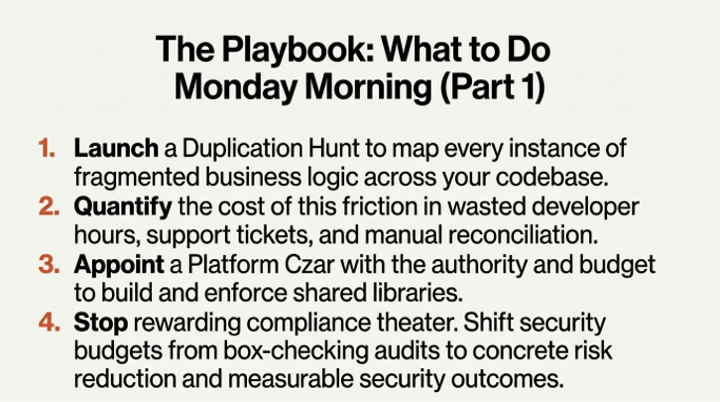

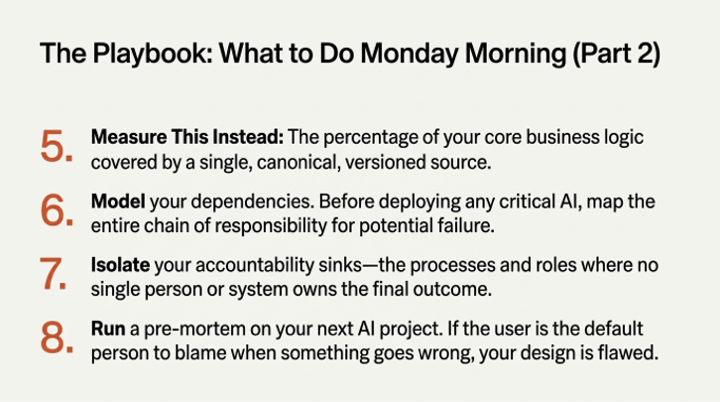

THE PLAYBOOK: EIGHT ACTIONS FOR MONDAY MORNING

For leaders, founders, and policymakers, the time to act is now.

- Map your most critical AI system using a principal-agent framework to identify hidden risks and misaligned incentives.

- Conduct a "responsibility audit" using a RACI matrix to discover and close accountability gaps in your team.

- Stop treating AI ethics and safety as a compliance function designed merely to manage liability.

- Measure system adoption by its "selfish utility" to the end-user, not by compliance with top-down mandates.

- Reframe AI-driven labor savings as "labor redeployment" in your business cases to secure buy-in from operational veto-holders.

- Appoint a cross-functional team to classify your agentic systems using a formal framework to understand their true risk profile.

- Integrate an explicit governance and responsibility plan into every AI project charter from day one.

- Mandate that every AI agent workflow include explicit, auditable escalation paths and protocols for handling protected health information (PHI) and personally identifiable information (PII).
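The "responsibility audit" in the playbook above lends itself to a simple automated check. The sketch below assumes a common RACI convention (exactly one Accountable owner and at least one Responsible party per task); the function name, the matrix layout, and the example tasks are hypothetical.

```python
from collections import Counter


def raci_gaps(matrix: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return accountability gaps per task.

    `matrix` maps task -> {person: role}, where role is one of
    "R" (Responsible), "A" (Accountable), "C" (Consulted), "I" (Informed).
    """
    gaps: dict[str, list[str]] = {}
    for task, assignments in matrix.items():
        counts = Counter(assignments.values())
        problems = []
        if counts["A"] == 0:
            problems.append("no Accountable owner")
        elif counts["A"] > 1:
            problems.append("multiple Accountable owners")
        if counts["R"] == 0:
            problems.append("no one Responsible")
        if problems:
            gaps[task] = problems
    return gaps


# Hypothetical example: two of the three tasks have accountability gaps.
example = {
    "approve model release": {"alice": "A", "bob": "R"},
    "handle escalations": {"bob": "R", "carol": "C"},
    "retrain model": {"alice": "A", "dave": "A", "erin": "I"},
}
print(raci_gaps(example))
```

A check like this could run whenever the matrix changes, so an unowned task is flagged at review time and not discovered after an incident.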

THE ORGANIZATIONAL TREATY

Back in Sarah’s codebase, the crisis that started with faulty discounts is being resolved. The investigation revealed that the rot ran deep, with duplicated logic for address validation, user authentication, and more scattered across the system. The fix is more than just good engineering; it is an "organizational treaty." By extracting the logic into a single, shared library, the teams are creating a pact—a re-establishment of common ground and shared responsibility. This isn't just a technical problem being solved; it's an "organizational problem," born from a culture where "teams didn’t know what shared utilities existed." Building the future of agentic AI will require fewer black boxes and more of these engineered treaties—explicit, auditable, and binding pacts that connect our systems, and ourselves, to a clear standard of accountability.
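As a rough illustration of what such a shared library might contain, here is a minimal sketch of a single discount function that checkout, admin preview, and reporting could all import. The function name, the compounding rule, and the reading of "leap year discount" as a bonus applied on February 29 are all assumptions made for illustration; the article does not specify the real business rules.

```python
import calendar
from datetime import date
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical rule: an extra 5% off on February 29 of a leap year.
LEAP_DAY_BONUS = Decimal("0.05")


def discounted_price(price: Decimal, rates: list[Decimal], on: date) -> Decimal:
    """Apply discount rates one after another (compounding), rounded to cents."""
    applied = list(rates)
    if calendar.isleap(on.year) and (on.month, on.day) == (2, 29):
        applied.append(LEAP_DAY_BONUS)
    result = price
    for rate in applied:
        if not Decimal("0") <= rate <= Decimal("1"):
            raise ValueError(f"discount rate out of range: {rate}")
        result *= Decimal("1") - rate
    return result.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
```

Because every service calls the same function with the same `Decimal` rounding, an edge case fixed here is fixed everywhere at once, which is the practical substance of the treaty.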