The Economics of Model Commoditization: The Smiling Curve of Intelligence

The “Smiling Curve” of AI: Why Models Are Worth $0

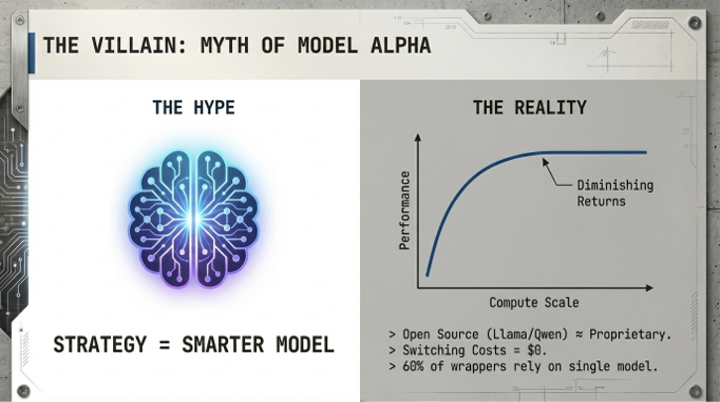

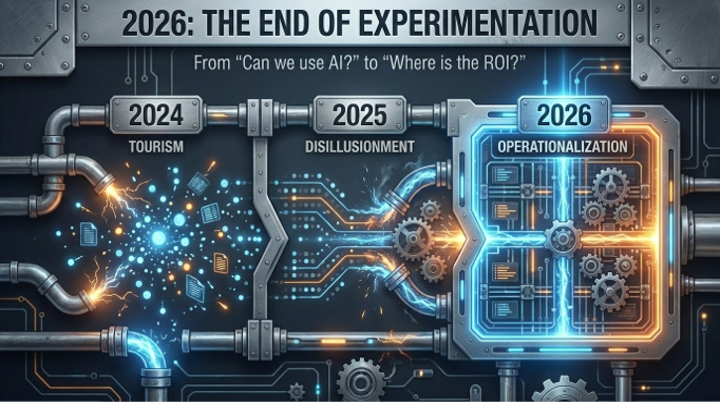

In 2024, the "alpha" was in the model: having access to GPT-4 was a competitive advantage. In 2026, intelligence is a utility, like electricity or bandwidth.

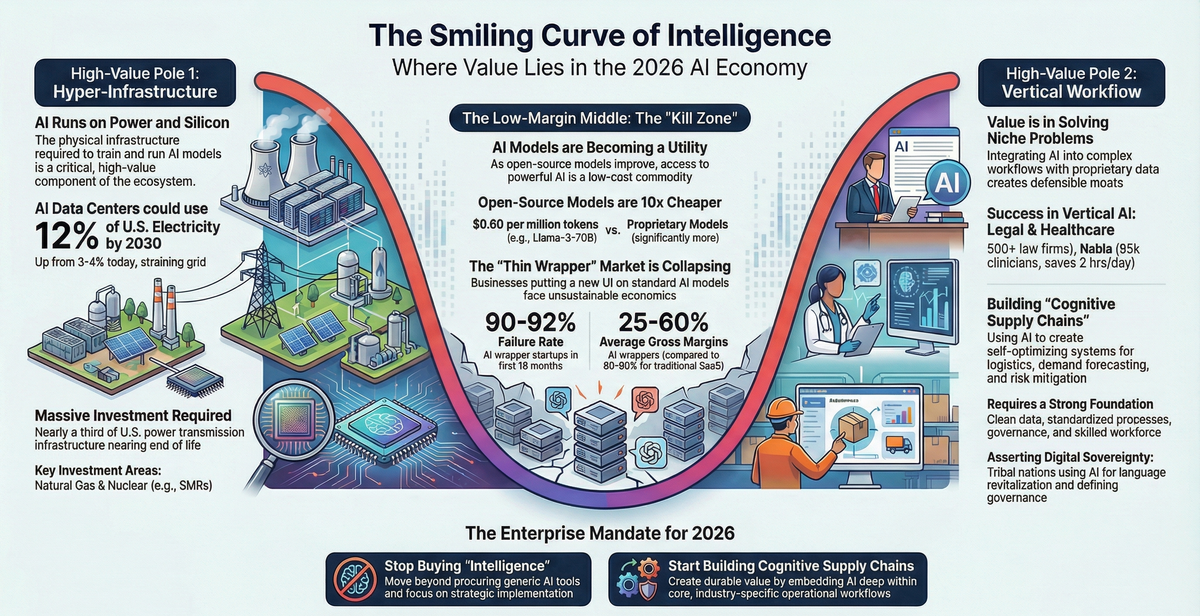

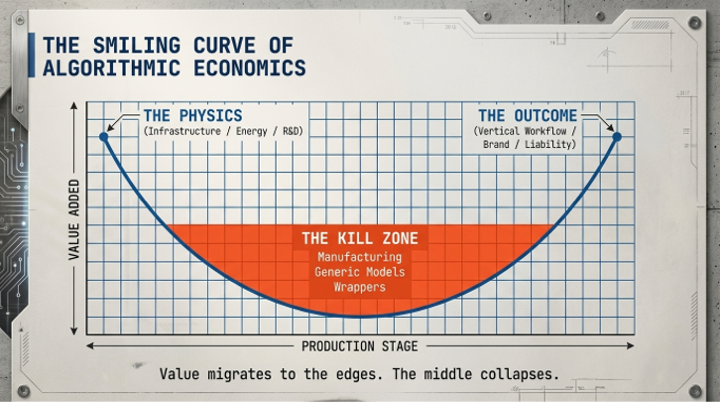

This shift is a predictable systemic response to a resource shock: when a foundational input becomes abundant, its price crashes and its economic value migrates to the edges of the value chain. The same transformation is now reshaping the artificial intelligence economy, a dynamic best understood through the framework of the "Smiling Curve." The middle of the curve has collapsed into a low-margin kill zone occupied by general-purpose model providers and the thin application wrappers built on top of them.

Value is fleeing to two opposite poles. The first is Hyper-Infrastructure: the physical foundations of AI, from the silicon and data centers to the energy and grid access that now represent the ultimate scarcity. The second is the Vertical Workflow: the deep integration of AI into proprietary, domain-specific systems that leverage an organization’s unique tribal knowledge.

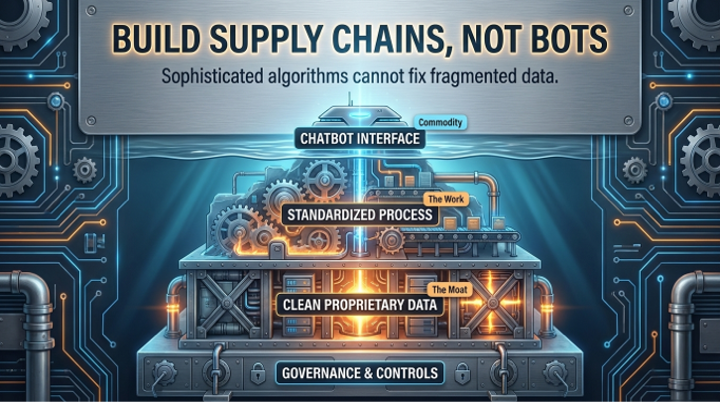

For the modern enterprise, this shift renders the old strategy obsolete. The new mandate is clear: Stop buying "intelligence." Start building "cognitive supply chains."

--------------------------------------------------------------------------------

The Great Deflation: How Intelligence Became a Commodity

Looking back from 2026, the crisis that culled the AI startup landscape was the Great Deflation. Between 2024 and 2026, the cost per million tokens for frontier models collapsed, driven by two forces: massive efficiency gains in inference architecture and a hyper-competitive market in which providers sacrificed margins to secure long-term ecosystem lock-in. The price war was swift and brutal.

| Provider / Model Tier | 2024 Pricing ($/1M tokens) | 2026 Pricing ($/1M tokens) | Pricing Trend |
|---|---|---|---|
| OpenAI Flagship (GPT-4/5) | Input: $10.00 / Output: $30.00 | Input: $1.25 / Output: $10.00 | ▼ ~88% input / ~67% output |
| Google Mid-Tier (Gemini Pro) | Input: $1.25 / Output: $10.00 | Input: $0.15 / Output: $0.60 | ▼ ~88% input / ~94% output |
| Anthropic (Claude Opus/Sonnet) | Input: $15.00 / Output: $75.00 | Input: $3.00 / Output: $15.00 | ▼ ~80% |
| Open-Source (Llama/DeepSeek) | Input: $0.60 / Output: $0.70 | Input: $0.28 / Output: $0.42 | ▼ ~53% input / ~40% output |
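Because input and output prices moved by different amounts, a single trend figure per row can hide the split. The minimal Python sketch below recomputes both declines using only the list prices in the table; the `PRICES` dictionary and helper names are illustrative. Note that row labels such as "Claude Opus/Sonnet" suggest some rows span more than one model tier, so the percentages describe price points rather than strictly like-for-like models.

```python
# Percentage price declines recomputed from the 2024 and 2026 list prices in the
# table above (USD per 1M tokens). Figures are taken as given; no external data.
PRICES = {
    # tier: ((2024 input, 2024 output), (2026 input, 2026 output))
    "OpenAI Flagship (GPT-4/5)": ((10.00, 30.00), (1.25, 10.00)),
    "Google Mid-Tier (Gemini Pro)": ((1.25, 10.00), (0.15, 0.60)),
    "Anthropic (Claude Opus/Sonnet)": ((15.00, 75.00), (3.00, 15.00)),
    "Open-Source (Llama/DeepSeek)": ((0.60, 0.70), (0.28, 0.42)),
}


def pct_decline(old: float, new: float) -> float:
    """Return the percentage drop from the old price to the new price."""
    return (old - new) / old * 100.0


def declines() -> dict[str, tuple[float, float]]:
    """Map each tier to its (input, output) percentage decline."""
    result = {}
    for tier, ((in_24, out_24), (in_26, out_26)) in PRICES.items():
        result[tier] = (pct_decline(in_24, in_26), pct_decline(out_24, out_26))
    return result


if __name__ == "__main__":
    for tier, (d_in, d_out) in declines().items():
        print(f"{tier}: input -{d_in:.1f}%, output -{d_out:.1f}%")
```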

This trend was accelerated by the emergence of extremely cheap "mini" and "nano" model variants priced at $0.05 to $0.10 per million tokens, making them functionally free for many enterprise use cases.
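To make "functionally free" concrete, a short Python sketch prices a workload at both ends of that band. The one-billion-tokens-per-month volume is a hypothetical assumption chosen for round numbers, and the sketch ignores any difference between input and output rates.

```python
# Monthly bill for a hypothetical workload at the $0.05-$0.10 per-million-token
# price band. The token volume is an illustrative assumption, not sourced data.
def monthly_cost(tokens_per_month: int, usd_per_million: float) -> float:
    """Cost in USD for a monthly token volume at a given per-1M-token price."""
    return tokens_per_month / 1_000_000 * usd_per_million


if __name__ == "__main__":
    volume = 1_000_000_000  # hypothetical: one billion tokens per month
    for price in (0.05, 0.10):
        print(f"${price:.2f}/1M tokens -> ${monthly_cost(volume, price):,.2f} per month")
```

At that volume the bill comes to roughly $50 to $100 a month, which is small next to the cost of the people and systems around it.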

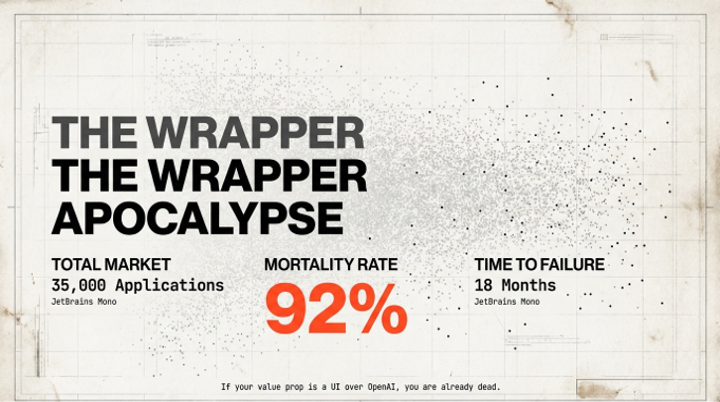

The consequence of this price collapse was the decimation of business models built on reselling commoditized intelligence. "Thin wrappers," applications whose primary value was a user interface over a major foundation model, faced an extinction-level event: AI startups that lacked defensible depth saw first-year failure rates of 90-92%. If the raw cost of cognitive labor is negligible, profit must be generated elsewhere.

The Smiling Curve: Where Value Fled

The Smiling Curve provides the map for this value migration. As the profitable center of the AI economy dissolved, value became highly concentrated at two opposite poles of the production chain in a classic feedback loop of commoditization and scarcity.

The Left Pole: The Hyper-Infrastructure Scarcity Stack

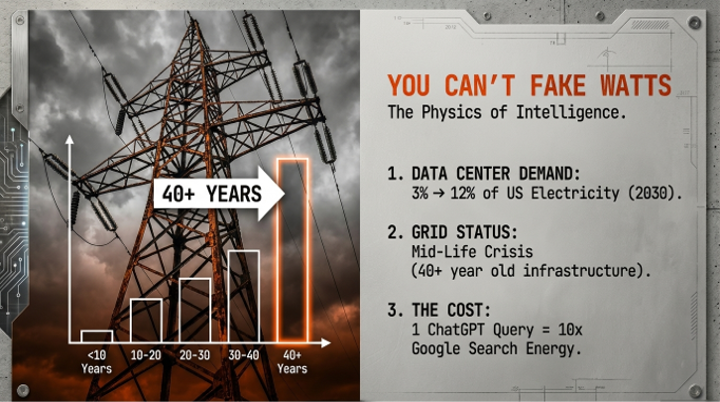

The first new center of value is the physical foundation of AI. As the model layer commoditized, value flowed predictably to the next-most-scarce layer below it, a dynamic common in complex adaptive systems. This "Scarcity Stack"—a hierarchy of assets that cannot be easily commoditized—includes chips, data centers, and, most critically, the energy and grid access that have become the greatest gating factor of the intelligence revolution.

This has ignited a capital expenditure supercycle, with hyperscalers like Microsoft, Google, Amazon, and Meta projected to invest over $527 billion in capex in 2026 alone. This spending is increasingly directed at solving a looming energy crisis. Data centers are projected to consume 11% to 12% of total U.S. electricity by 2030, a dramatic rise from 3-4% today. This surge has collided with an aging, underfunded electrical grid, forcing a strategic pivot to secure reliable, base-load power. Hyperscalers are now striking long-term, multi-decade nuclear power contracts, such as AWS's agreement to acquire a data center campus adjacent to the Susquehanna nuclear plant, to guarantee the 24/7 energy supply their AI infrastructure demands.

The winners at this pole are clear: the hyperscalers who own the infrastructure, the power companies who supply the energy, and the data center operators who build the facilities. The new scarcity is no longer the model, but a connection to the grid and a guarantee of reliable power.

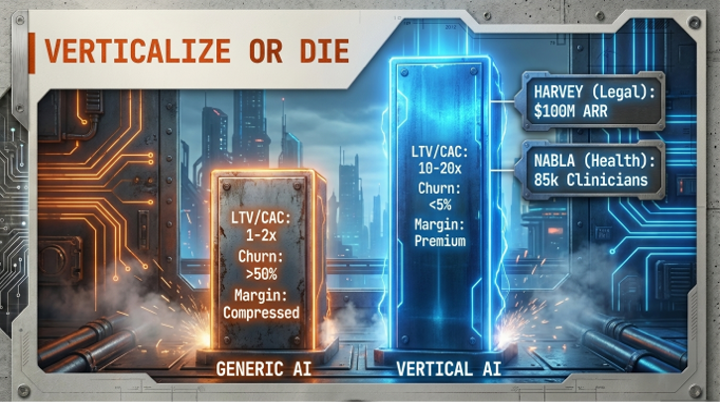

The Right Pole: Vertical Workflows and Tribal Knowledge

The second pole where value has concentrated is in deep, domain-specific integration. Sustainable competitive advantage is no longer found in accessing a powerful model, but in building superior applications that solve specific, high-value, real-world problems.

The ultimate defensive moat in this new economy is "tribal knowledge": the proprietary data, nuanced expertise, and unique operational context that general-purpose AI cannot replicate. A telling illustration is the domain of American Indian tribal court practice. AI struggles with unsettled, culturally unique, or indeterminate law such as tribal common law, where the corpus of written law is small and its application requires deep cultural context. This makes it a high-value domain in which human expertise and specific, non-fungible knowledge create a defensible position that a generic model cannot easily erode.

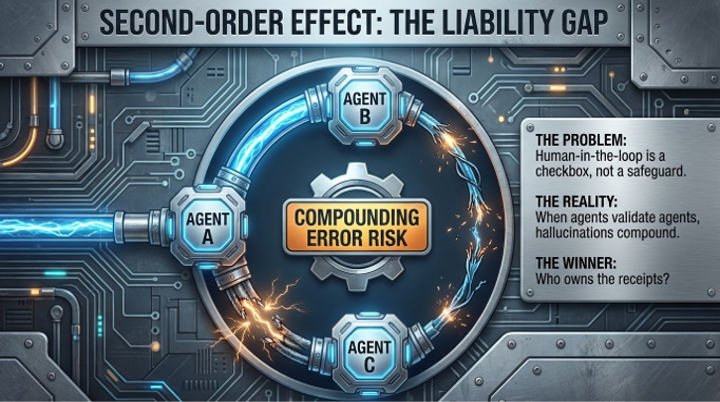

But executing on this tribal knowledge requires more than data; it demands a "trust infrastructure," the "passport system" for AI agents that makes a cognitive supply chain enterprise-grade and defensible. This layer encompasses the governance, audit trails, and permissioning that allow AI to act on consequential decisions. Without it, an enterprise cannot deploy agents for anything meaningful, because the risks of unmonitored action are too great.
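The governance, audit-trail, and permissioning layer can be pictured as a gate that every agent action must pass through. The Python sketch below is a minimal illustration under stated assumptions: the `TrustLayer` class, its `grant`/`authorize` methods, and the agent and action names are hypothetical, not any vendor's API. The design point it shows is that denied actions are logged as well as allowed ones, so the audit trail records what agents attempted, not just what they did.

```python
# Hypothetical "trust infrastructure" gate: every agent action is checked against
# an explicit permission grant, and each decision (allowed or denied) is appended
# to an audit log. All names here are illustrative.
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    agent_id: str
    action: str
    allowed: bool


@dataclass
class TrustLayer:
    # agent_id -> set of actions that agent is permitted to perform
    grants: dict[str, set[str]] = field(default_factory=dict)
    log: list[AuditEntry] = field(default_factory=list)

    def grant(self, agent_id: str, action: str) -> None:
        """Permission: allow one agent to perform one action."""
        self.grants.setdefault(agent_id, set()).add(action)

    def authorize(self, agent_id: str, action: str) -> bool:
        """Check the grant, record the decision in the audit log, and return it."""
        allowed = action in self.grants.get(agent_id, set())
        self.log.append(
            AuditEntry(
                timestamp=datetime.now(timezone.utc).isoformat(),
                agent_id=agent_id,
                action=action,
                allowed=allowed,
            )
        )
        return allowed


if __name__ == "__main__":
    trust = TrustLayer()
    trust.grant("freight-agent", "book_container")
    print(trust.authorize("freight-agent", "book_container"))  # True
    print(trust.authorize("freight-agent", "approve_payment"))  # False
    print(len(trust.log))  # 2
```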

This shift has changed the enterprise mandate from buying "intelligence" to building "cognitive supply chains"—autonomous networks that sense, interpret, learn, and act upon data across traditional organizational boundaries.

| Capability | Example in a Logistics Context |
|---|---|
| Sensing | Real-time port traffic and weather disruption tracking |
| Learning | Improving freight invoice interpretation without manual updates |
| Acting | Automatically securing container space before rate spikes |
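The sense, interpret, learn, and act capabilities fit together as a loop. The Python sketch below illustrates that loop for the container-booking example in the table above. It is a toy under stated assumptions: the `Signals` fields, the 0.7 booking threshold, the learning rate, and the single-weight risk model are all hypothetical simplifications, not a description of any real logistics system.

```python
# Hypothetical sense -> interpret -> learn -> act loop for the container-booking
# example. Signal names, the 0.7 booking threshold, and the learning rate are
# illustrative assumptions, not parameters of any real system.
from dataclasses import dataclass


@dataclass
class Signals:
    """Sensing: the latest readings from (hypothetical) port and weather feeds."""
    port_congestion: float  # 0.0 (clear) to 1.0 (gridlocked)
    storm_risk: float       # 0.0 (calm) to 1.0 (severe)


class ContainerAgent:
    def __init__(self, weight_congestion: float = 0.5, learning_rate: float = 0.1):
        self.w = weight_congestion  # weight on congestion; weather gets 1 - w
        self.lr = learning_rate

    def interpret(self, s: Signals) -> float:
        """Interpreting: combine raw signals into one rate-spike risk score in [0, 1]."""
        return self.w * s.port_congestion + (1 - self.w) * s.storm_risk

    def learn(self, s: Signals, spike_happened: bool) -> None:
        """Learning: one gradient step on squared error, nudging w toward the better predictor."""
        target = 1.0 if spike_happened else 0.0
        error = target - self.interpret(s)
        # d(score)/dw = port_congestion - storm_risk
        self.w += self.lr * error * (s.port_congestion - s.storm_risk)
        self.w = min(max(self.w, 0.0), 1.0)  # keep the weight a valid mix

    def act(self, s: Signals, threshold: float = 0.7) -> str:
        """Acting: book space ahead of a predicted spike, otherwise wait."""
        return "book_container" if self.interpret(s) >= threshold else "wait"


if __name__ == "__main__":
    agent = ContainerAgent()
    readings = Signals(port_congestion=0.9, storm_risk=0.3)
    print(agent.act(readings))  # risk score is about 0.6, below 0.7 -> "wait"
    agent.learn(readings, spike_happened=True)  # a spike occurred anyway
    print(f"updated congestion weight: {agent.w:.3f}")  # 0.524
```

The learning step only reweights two signals; a real system would learn from far richer features, but the loop structure (sense, interpret, act, then learn from the outcome) is what distinguishes a cognitive supply chain from a one-off model call.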

The winners at this pole are enterprises with proprietary data, deep domain expertise, and the discipline to build both the cognitive and trust infrastructure for their core workflows. The new scarcity is defensible depth and operational integration.

--------------------------------------------------------------------------------

The Turn: Defeating the Villain of "AI Tourism"

The strategic pivot required for 2026 is a turn away from the comforting lies and bad institutional habits that defined 2025.

The villain of this era was the misconception that "AI transformation is a technical challenge." This belief fueled a failed strategy of endless experimentation, or "AI tourism," and a proliferation of thin wrappers, producing a massive "Value Realization Gap." The gap arose because organizations bolted AI onto fragmented data and unstandardized processes, so AI projects consistently failed to show measurable ROI. The payback period for enterprise AI stretched to an unsustainable 2-4 years.
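The payback figure is simple arithmetic: the number of years needed for cumulative net benefit to cover the upfront investment. A minimal Python sketch of the undiscounted version follows; the dollar amounts are hypothetical and chosen only to show that halving a program's annual net benefit doubles its payback period, from 2 years to 4.

```python
# Simple (undiscounted) payback period: upfront investment divided by annual net
# benefit. The dollar figures are hypothetical, chosen to show how a 2-4 year
# payback arises.
def payback_years(upfront_cost: float, annual_net_benefit: float) -> float:
    """Years until cumulative net benefit equals the upfront cost."""
    if annual_net_benefit <= 0:
        raise ValueError("payback is undefined without a positive annual net benefit")
    return upfront_cost / annual_net_benefit


if __name__ == "__main__":
    # Hypothetical $3M program returning $1.5M or $0.75M per year, net.
    print(payback_years(3_000_000, 1_500_000))  # 2.0
    print(payback_years(3_000_000, 750_000))    # 4.0
```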

The hero is the new, actionable strategy for 2026: Stop buying intelligence. Start building systems. This approach frames AI not as a feature to be added but as foundational infrastructure, much as finance functions embedded ERP systems two decades ago, making them the digital backbone of the enterprise.

A powerful historical parallel illuminates this new playbook. The current moment is not another dot-com bubble, a first-wave revolution driven by speculative startups. It is a second-wave revolution like electrification, driven by established giants with "fortress balance sheets." In such revolutions, the biggest winners are rarely the builders of the underlying infrastructure; in electrification, they were not the power plant builders. They were companies like Sears, which leveraged the already-built railway system to create a brutally efficient mail-order business, and Ford, which used electricity to redesign the factory floor and build the assembly line. They didn't sell the infrastructure; they used it to build vertically integrated systems that crushed their competition. This is the mandate for AI.

Case Study: Apple's Walled Garden in the Kill Zone

A significant outlier that has successfully navigated the low-margin kill zone is Apple. Although it operates in the "middle" of the Smiling Curve, providing AI-enabled services directly to consumers, Apple has avoided commoditization by designing a complete, vertically integrated system. Its strategy rests on three pillars:

- The Distribution Edge: Apple controls the operating system. It doesn't need to persuade users to download an app; it embeds intelligence directly into the system layer with "Zero Friction." A single software update, such as the rumored iOS 26.4 reported by outlets including Bloomberg, can make hundreds of millions of devices AI-native overnight, a scale few competitors can match instantly.

- The Privacy Moat: Apple's Private Cloud Compute (PCC) architecture creates a trusted environment for AI. By handling most processing on-device and using a secure cloud for more complex tasks, Apple can scale powerful AI features without compromising its core, privacy-first brand promise.

- The Hardware Super-Cycle: Advanced AI is computationally demanding. By making new AI features exclusive to its latest hardware, Apple has created a powerful, built-in incentive for users with older devices to upgrade. Because running state-of-the-art models locally requires newer silicon, AI adoption drives a hardware upgrade cycle that links AI features directly to hardware sales.
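The Privacy Moat pillar describes a split architecture: handle what the device can handle locally, and escalate heavier tasks to a secure cloud tier. A minimal Python sketch of such a routing decision follows, assuming a hypothetical local token budget and a hypothetical `needs_large_model` flag. It illustrates the on-device-first pattern only and does not reflect how Apple's Private Cloud Compute actually decides where a request runs.

```python
# Hypothetical request router in the spirit of an on-device-first design:
# requests within a local compute budget stay on the device; larger ones go to
# a secure cloud tier. The budget and tier names are illustrative and do not
# describe Apple's actual Private Cloud Compute logic or APIs.
from enum import Enum


class Tier(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"


def route(estimated_tokens: int, needs_large_model: bool, local_budget: int = 4_000) -> Tier:
    """Prefer on-device execution; escalate only when the task exceeds local capacity."""
    if needs_large_model or estimated_tokens > local_budget:
        return Tier.PRIVATE_CLOUD
    return Tier.ON_DEVICE


if __name__ == "__main__":
    print(route(500, needs_large_model=False))     # Tier.ON_DEVICE
    print(route(12_000, needs_large_model=False))  # Tier.PRIVATE_CLOUD
```

Defaulting to the device and escalating only on an explicit condition keeps the privacy-preserving path as the common case.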

Apple’s success reinforces the central thesis: the value is not in the raw model. It is in the design of the entire system around it, from the silicon to the software to the services that bind them together.

Conclusion: The Mandate for 2026

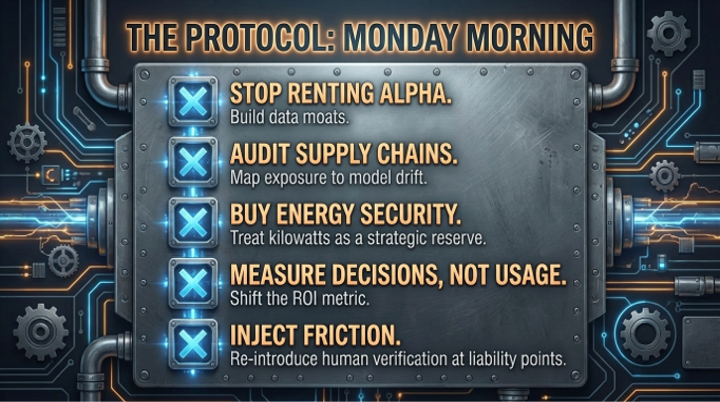

The arbitrage is over. The alpha in artificial intelligence has decisively migrated from the model to the system. The 2026 economy is defined by the new laws of strategy that emerge from this systemic shift: a radical deflation in the cost of raw intelligence and a corresponding concentration of value at the physical and specialized ends of the Smiling Curve. To navigate this new landscape, the mandates for the enterprise are not optional recommendations but the only viable responses to these inexorable pressures.

- Forge Cognitive Supply Chains: Shift investment from commoditized model access to the proprietary integration of your organization’s unique tribal knowledge.

- Master the Physics of Intelligence: Recognize energy and grid access as the ultimate constraints and build a resilient power strategy to underwrite future growth.

- Weaponize Operational Metrics: Abandon speculative "AI tourism" for governance by design. Mandate that every AI initiative prove its value through hard metrics such as cycle-time compression and decision-latency reduction.
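Both metrics reduce to a percentage improvement over a pre-AI baseline. The Python sketch below shows one way to compute them; the metric definitions and every sample number are hypothetical assumptions for illustration, not benchmarks.

```python
# Cycle-time compression and decision-latency reduction expressed as percentage
# improvements over a pre-AI baseline. Metric definitions and the sample numbers
# are illustrative assumptions.
from statistics import median


def pct_reduction(baseline: float, current: float) -> float:
    """Percentage reduction relative to the baseline (positive = improvement)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100.0


if __name__ == "__main__":
    # Hypothetical invoice cycle times in hours, before and after automation.
    before = [48.0, 52.0, 40.0, 60.0, 50.0]
    after = [30.0, 28.0, 35.0, 25.0, 32.0]
    cycle = pct_reduction(median(before), median(after))
    # Hypothetical decision latency in minutes (signal received -> action approved).
    latency = pct_reduction(90.0, 15.0)
    print(f"cycle-time compression: {cycle:.1f}%")         # 40.0%
    print(f"decision latency reduction: {latency:.1f}%")   # 83.3%
```

Comparing medians rather than means is a deliberate choice here: a few unusually slow cases before or after a rollout would otherwise dominate the headline number.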

As intelligence becomes a utility, what is the next civilizational inefficiency that these new cognitive supply chains will weaponize?